Digging Deep Into What Docker Is & How It Works

Traditionally, to deploy applications and software, operations teams had to procure hardware such as physical servers, networking components, and storage drives, and then set it up in on-prem data centers. With the advent of the cloud, the hurdle of procuring, setting up, and managing physical hardware for servers disappeared, because the cloud lets us set up infrastructure on demand, as and when required.

The Existing Problem in Production Environments

Cloud computing caused a paradigm shift in the software industry and changed the way people build software. It enabled us to craft solutions with enterprise-grade scalability and reliability. However, one problem had existed for a very long time, and it caused bottlenecks when deploying to production environments.

That problem was the dependency of software on the environment it runs in, such as the operating system, installed libraries, and configuration. We have often seen software that works fine in a developer's local environment but fails or breaks in production. This dependency on the environment caused bottlenecks because it led to unstable builds in production environments.

How does Docker solve this problem?

Docker provides a runtime environment for deploying our applications in the form of containers. A container is a pre-packaged, lightweight, virtualized environment that includes only the bare minimum components the software needs to function properly. Because it contains only the required libraries, it is very lightweight, which makes it easy to build, distribute, and ship applications as containers.

Docker also provides the tooling to orchestrate and manage these containers. With Docker, we can build, distribute, and ship our software easily and efficiently. It helps automate the build and deployment process by offering out-of-the-box integration with various CI/CD tools and secure ways of distributing builds in the form of Docker images. It also offers enterprise-grade scalability, which makes it easier to handle large volumes of traffic in our environment.

Understanding the Docker architecture

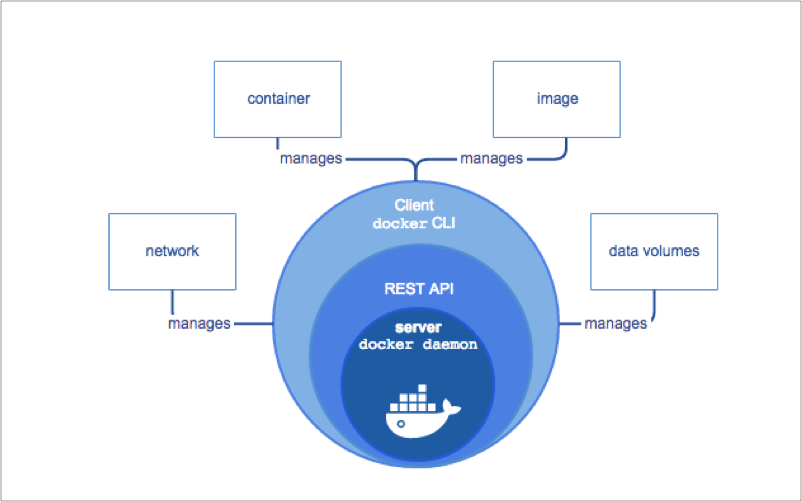

Docker-Architecture

Docker manages containers through Docker Engine, which is made up of several components. The Docker CLI sends requests to Docker's REST API to perform management tasks, which makes it easy to manage Docker containers on servers. At the core of Docker Engine is the Docker daemon (dockerd), which carries out these tasks in the background: managing Docker images, containers, processes, and so on.
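To make the CLI-to-daemon relationship more concrete, here is a minimal Python sketch of the kind of request the CLI sends when we run `docker ps`. It assumes a Linux host where the daemon listens on its default Unix socket, `/var/run/docker.sock`. The helper names (`UnixHTTPConnection`, `list_containers_path`, `list_containers`) are hypothetical and not part of any Docker SDK. `GET /containers/json` is the Engine API endpoint for listing containers; the real CLI additionally negotiates an API version and prefixes it to the path.

```python
import http.client
import json
import socket

# Assumption: a Linux host where dockerd listens on its default Unix socket.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """Hypothetical helper: an HTTP connection over a Unix domain socket."""

    def __init__(self, socket_path: str):
        # "localhost" only fills the Host header; no TCP connection is opened.
        super().__init__("localhost")
        self.socket_path = socket_path

    def connect(self):
        # Replace the usual TCP connect with a Unix-socket connect.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def list_containers_path(include_stopped: bool = False) -> str:
    """Engine API path behind `docker ps` (or `docker ps -a` when True)."""
    path = "/containers/json"
    return path + "?all=true" if include_stopped else path


def list_containers(include_stopped: bool = False) -> list:
    """Ask the daemon for its containers; requires a running Docker daemon."""
    conn = UnixHTTPConnection(DOCKER_SOCKET)
    try:
        conn.request("GET", list_containers_path(include_stopped))
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

Calling `list_containers()` only works where a daemon is running and the current user is allowed to access the socket. Each item in the returned list describes one container, with fields such as `Id`, `Image`, and `State`.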

Key Docker Terminology

1) Docker Containers

A container is a piece of software or an application running as an independent, isolated process. It can be one component of a distributed application or an entire application. For example, if an application has three components (a frontend in AngularJS, backend APIs in Node.js, and a database in MongoDB), it would be hosted in three separate Docker containers, each containing its component and the libraries it requires.
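The three-component example above maps to three separate container-create requests to the Docker Engine API, one per component. This minimal Python sketch only builds the requests and does not send them. The image names `example/frontend:1.0` and `example/backend:1.0` are hypothetical; `mongo` is the official MongoDB image on Docker Hub. Running `docker run` performs a create like this and then starts the container.

```python
import json

# Hypothetical image names for the AngularJS frontend and Node.js backend;
# "mongo" is the official MongoDB image on Docker Hub.
SERVICES = {
    "frontend": "example/frontend:1.0",
    "backend": "example/backend:1.0",
    "database": "mongo",
}


def create_requests(services: dict) -> list:
    """Build one (path, JSON body) pair per container; nothing is sent.

    Each pair describes a POST to the Engine API's /containers/create
    endpoint, with the container name passed as a query parameter.
    """
    pending = []
    for name, image in services.items():
        path = f"/containers/create?name={name}"
        body = json.dumps({"Image": image})
        pending.append((path, body))
    return pending


for path, body in create_requests(SERVICES):
    print("POST", path, body)
# First line printed:
# POST /containers/create?name=frontend {"Image": "example/frontend:1.0"}
```

Keeping each component in its own container means each one can be rebuilt, redeployed, or scaled without touching the other two.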

2) Docker CLI

The Docker CLI is Docker's command-line interface. It is used to build, distribute, and manage Docker containers, images, volumes, networks, and more. In short, the CLI is how we interact with Docker, and it performs every action by calling Docker's REST API.

3) Docker Images

An application is packaged in the form of an image, and Docker containers are created from these images. An image bundles the application code, the required libraries, and other components, and a container is a running instance of an image.

4) Docker Volumes

Containers run on the Docker engine, so their storage is not directly linked to the host file system, and a container is essentially a set of processes. Data written inside a container survives a stop or restart, but it is lost when the container is removed, for example when it is recreated during a redeployment. If we need to store persistent data that must outlive the container itself, we use Docker volumes. A volume acts as a bridge between the container and the host file system, so files are stored persistently on the host.
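As a hedged sketch of how a volume is attached, this Python function builds a container-create body (as sent to the Engine API's `/containers/create` endpoint) for a MongoDB container that mounts a named volume at `/data/db`, the data directory used by the official `mongo` image. The volume name `mongo-data` and the function name are hypothetical. On the command line, the same mount is usually written as `docker run -v mongo-data:/data/db mongo`.

```python
import json


def mongo_create_body(volume_name: str) -> dict:
    """Container-create body that keeps MongoDB's data in a named volume.

    The volume lives outside the container's own filesystem, so removing
    and recreating the container does not delete the data in /data/db.
    """
    return {
        "Image": "mongo",  # official MongoDB image
        "HostConfig": {
            "Mounts": [
                {
                    "Type": "volume",       # Docker-managed named volume
                    "Source": volume_name,  # hypothetical name, e.g. "mongo-data"
                    "Target": "/data/db",   # MongoDB's data directory in the image
                }
            ]
        },
    }


print(json.dumps(mongo_create_body("mongo-data"), indent=2))
```

A new container created with the same volume name picks up the existing database files, which is what makes the data persistent across redeployments.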

5) Docker Networks

Docker networks let containers communicate with the host system and with each other. A network acts as a bridge between the host network and the containers, and it provides a secure, isolated medium for transferring data between containers.
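A minimal Python sketch of the networking idea, building Engine API request bodies without sending them: one body creates a user-defined bridge network (`POST /networks/create`), and a helper sets `HostConfig.NetworkMode` so a container joins it. The network name `app-net`, the backend image name, and the helper names are hypothetical. On a user-defined bridge network, Docker's built-in DNS lets containers reach each other by container name, so a backend could connect to a database container named `database` at `mongodb://database:27017` (27017 is MongoDB's default port). The CLI equivalents are `docker network create app-net` and `docker run --network app-net ...`.

```python
import json

# Hypothetical network name used only for this illustration.
NETWORK = "app-net"


def network_create_body(name: str) -> dict:
    """Body for POST /networks/create, creating a user-defined bridge network."""
    return {"Name": name, "Driver": "bridge"}


def attach(container_body: dict, network: str) -> dict:
    """Return a copy of a container-create body that joins `network`.

    The input dict is not modified; its HostConfig (if any) is copied and
    NetworkMode is set to the network's name.
    """
    body = dict(container_body)
    host_config = dict(body.get("HostConfig", {}))
    host_config["NetworkMode"] = network
    body["HostConfig"] = host_config
    return body


backend = attach({"Image": "example/backend:1.0"}, NETWORK)  # hypothetical image
database = attach({"Image": "mongo"}, NETWORK)

print(json.dumps(network_create_body(NETWORK)))
print(json.dumps(backend))
print(json.dumps(database))
```

Containers that are not attached to `app-net` cannot reach these two over it, which is the isolation the paragraph above refers to.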

Once people understand how Docker works, a common question is how it differs from traditional virtual machines. Apart from Docker being lightweight, there are major differences in how the two function, and these differences are what make Docker more efficient than virtual machines.

Difference between Docker & Virtual Machines

Docker and virtual machines are both based on virtualization, but they interact with the host system differently. Docker is installed on top of a host operating system and relies on that operating system's kernel to allocate resources to the containers.

Docker vs Virtual Machine

A major advantage of Docker is that, because it works through the host operating system, containers can receive resources on demand, as and when they need them. This makes Docker more efficient than virtual machines. Why? Unlike Docker, virtual machines run on top of a hypervisor, which allocates a fixed share of resources to each VM, and that allocation typically cannot be resized without interrupting the application.

Another disadvantage of VMs is that any allocated capacity the application does not use is wasted, which reduces the efficiency of the system. In Docker, by contrast, capacity is distributed among the containers as and when they need it, and limits can be applied to cap the maximum capacity each container may use. This is why Docker is often preferred over VMs: it provides an easy way to build and distribute applications with enterprise-grade security, scalability, and reliability.
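The efficiency argument in the last two paragraphs can be illustrated with a toy model. This Python sketch is a deliberate simplification under stated assumptions: capacity is a single number in arbitrary units, each VM reserves an equal fixed slice, and containers share one pool with an optional per-container cap. It is not how a hypervisor or the Linux kernel actually schedules resources, and the function names are hypothetical. In real Docker, such per-container caps are set with options like `docker run --memory` or `--cpus`.

```python
from typing import List, Optional, Tuple


def vm_allocation(demands: List[int], reserved_per_vm: int) -> Tuple[int, int]:
    """Toy model: each VM owns a fixed slice. Returns (served, idle).

    A VM cannot use more than its slice, and the unused part of a slice
    stays idle because other VMs cannot borrow it.
    """
    served = sum(min(d, reserved_per_vm) for d in demands)
    idle = sum(max(reserved_per_vm - d, 0) for d in demands)
    return served, idle


def container_allocation(
    demands: List[int], pool: int, cap: Optional[int] = None
) -> Tuple[int, int]:
    """Toy model: containers share one pool. Returns (served, idle).

    Each container takes what it needs, up to an optional per-container
    cap; if total demand exceeds the pool, the pool is simply exhausted
    (how the kernel splits it under contention is not modelled here).
    """
    wanted = [min(d, cap) if cap is not None else d for d in demands]
    served = min(sum(wanted), pool)
    return served, pool - served


demands = [1, 2, 7]  # made-up demand of three workloads, arbitrary units
print(vm_allocation(demands, reserved_per_vm=4))      # (7, 5)
print(container_allocation(demands, pool=12, cap=6))  # (9, 3)
```

With the same 12 units of host capacity, three fixed 4-unit VM slices leave 5 units idle while the busiest workload is held to 4, whereas the shared pool serves 9 units even with a 6-unit cap per container.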

Want to know more about Docker, cloud computing, and DevOps? Get in touch with our expert DevOps engineers to explore how we can transform your business operations.